A while ago I was working on optimizing memory use for some django instances. During that process, I managed to better understand memory management within django, and thought it would be nice to share some of those insights. This is by no means a definitive guide. It’s likely to have some mistakes, but I think it helped me grasp the configuration options better, and allowed easier optimization.

Does django leak memory?

In actual fact, no. It doesn’t. The title is therefore misleading, I know. However, if you’re not careful, your memory usage or configuration can easily exhaust all the memory on the server and crash django. So whilst django itself doesn’t leak memory, the end result is very similar.

Memory management in Django – with (bad) illustrations

Let’s start with the basics and look at a django process. A django process is the basic unit that handles requests from users. We have several of those on the server, so that we can handle more than one request at a time. Each process, however, handles only one request at any given time.

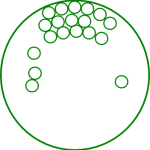

But let’s look at just one.

Cute, isn’t it? It’s a little like a balloon actually (and balloons are generally cute). The balloon has a certain initial size to allow the process to do all the stuff it needs to. Let’s say this is balloon size 1.

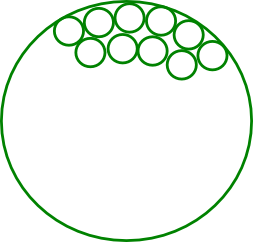

Now every request that comes to the server gets sent to one of those (cute) django processes. Then, to serve the request, the process loads objects into memory. Like this:

Those little bubbles are the objects loaded into memory. Once the process finishes processing a request, it clears all the objects from memory and goes back to being ‘empty’. It is still size 1, since all the objects fitted within the space.

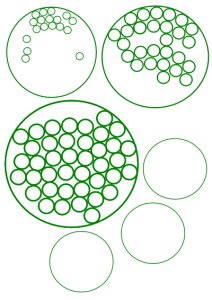

But sometimes the request is a bit heavier. It needs to load more objects than the balloon’s current size allows.

So the process simply inflates itself and grows a little. Easy. Now it’s size 2. More space for bubbles.

And of course, once the request finishes, it clears all those bubbles and there’s space for the next ones.
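To make the grow-and-clear cycle a bit more concrete, here is a minimal sketch using Python’s `tracemalloc` module. The `handle_request` function and the object sizes are made up for illustration. Also note that `tracemalloc` only tracks allocations made by Python itself, so this is an analogy for the balloon, not a measurement of the real process size.

```python
import tracemalloc


def handle_request(n_objects):
    """Pretend to serve a request by loading n_objects 'bubbles' into memory."""
    bubbles = [bytes(1024) for _ in range(n_objects)]  # roughly 1 KiB each
    return len(bubbles)  # 'bubbles' is released when the function returns


tracemalloc.start()
handle_request(10_000)  # a 'heavy' request, around 10 MiB of bubbles
current, peak = tracemalloc.get_traced_memory()
tracemalloc.stop()

print(f"still in use after the request: {current} bytes")
print(f"high-water mark during the request: {peak} bytes")
```

Once the request is done, `current` drops back to almost nothing (the bubbles are gone), while `peak` still remembers how much room the request needed.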

An important thing to note: the balloon (process) never shrinks. It can only grow. But this is (kind-of) ok, since it will never grow bigger than the biggest request we can get. So even a very big request (let’s say one that uses 1GB of memory), we can probably handle. Right??

Not quite. So what’s the problem?

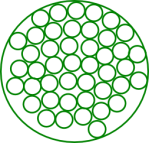

Well, like this little cute process we have other processes. Remember, we have to serve more than one user at a time, so we must keep a few of those balloons running. If more than one BIG request comes in at roughly the same time, they will inflate not just one balloon, but a few of them. And these balloons compete for space on the server (which is like a big room that contains the balloons, but the room does not grow).

This is our room:

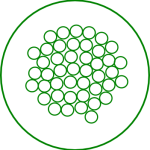

Of course we can clear the room and start empty – this is what we do when we reboot the server or even just restart django. It is also what we have to do when the server crashes, that is, when the balloons grew so big that other balloons couldn’t grow any more. But rebooting all the time is not an option. When we do that, everything stops, including requests that are being processed, even if they’re half-way through.

So – how about we ‘pop’ those balloons every now and then – when they’re NOT processing a request (other balloons do it), and start from a small balloon? That’s actually possible. However, there are two limitations to be aware of:

- Creating a new balloon takes some effort. We have to ‘make’ the balloon, and while we make it the other balloons respond more slowly.

- We cannot just ‘pop’ a balloon based on its size. Instead we can only create an ‘automatic balloon popper’ that pops the balloon after X requests.

Our degree of control is as follows:

- minspare – how many empty (spare) balloons we keep ready. This will potentially save us effort later by having a few at hand. The ‘cost’ in terms of memory is {number of balloons} X {balloon initial size}. The benefit is saving the time it takes to create a new process for simultaneous requests. However, this parameter is not very helpful for our problem.

- maxchildren/maxspare – the maximum number of balloons/processes we want to have on the system (maxchildren is the hard limit on the total, maxspare caps how many idle ones are kept around). This determines the maximum number of simultaneous requests we can deal with. The ‘cost’ is {number of balloons} X {balloon size}. The balloon size can obviously grow over time!

- maxrequests – this is the ‘auto-popper’. We can decide after how many requests we ‘pop’ a balloon and start a new one.

So if we set maxrequests too low, say 1, then the system will work very hard to create a new process/balloon for every request. This is silly if the request is very small and doesn’t need a big balloon. With too high a value, however, the balloons might grow too much before they’re popped. And even if maxrequests is 1, if we get a few requests at the same time, each causing our balloons to grow too much, we might still run out of space!
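The auto-popper can be sketched as a toy simulation. This is not how django or the FastCGI server actually implement it: `simulate_worker` and the request sizes are invented for illustration, and a balloon’s size is simply modelled as the biggest request it has seen since it was last popped.

```python
def simulate_worker(request_sizes, maxrequests, initial_size=1):
    """Return the balloon size recorded after each request.

    The balloon grows to fit the largest request seen since its last restart
    and is replaced by a fresh one once it has served `maxrequests` requests.
    """
    size = initial_size
    served = 0
    history = []
    for request_size in request_sizes:
        size = max(size, request_size)  # the balloon only ever grows
        served += 1
        history.append(size)
        if served >= maxrequests:
            size, served = initial_size, 0  # 'pop' it and start a new one
    return history


sizes = [1, 1, 5, 1, 1, 1, 1, 1]  # one heavy request among small ones
never_popped = simulate_worker(sizes, maxrequests=1000)
popped_every_4 = simulate_worker(sizes, maxrequests=4)

print(never_popped)    # [1, 1, 5, 5, 5, 5, 5, 5]
print(popped_every_4)  # [1, 1, 5, 5, 1, 1, 1, 1]
```

With a high maxrequests the balloon stays at size 5 long after the heavy request is gone. With maxrequests=4 it is back to size 1 after the fourth request, at the price of creating a new balloon.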

Our worst-case scenario is calculated by: {number of simultaneous requests} X {size of the request}. Let’s say our server has 4GB of memory in total, which probably leaves about 3GB for django itself. With requests that might take ~1GB of memory each (worst-case scenario), we can only serve a maximum of 3 such requests. Not even simultaneously, just in proximity to each other (the balloons don’t shrink afterwards), before the server runs out of memory…
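Here is the same arithmetic as a few lines of Python. The function names are hypothetical, and the 1GB set aside for everything other than django is the same rough guess as in the text.

```python
def worst_case_memory_gb(simultaneous_requests, request_size_gb):
    """Worst case: every simultaneous request inflates its own balloon."""
    return simultaneous_requests * request_size_gb


def max_heavy_requests(available_gb, request_size_gb):
    """How many heavy requests fit before the room is full."""
    return int(available_gb // request_size_gb)


total_gb = 4
available_gb = total_gb - 1  # assume about 1GB is used by everything else
heavy_request_gb = 1

print(max_heavy_requests(available_gb, heavy_request_gb))          # 3
print(worst_case_memory_gb(4, heavy_request_gb) > available_gb)    # True
```

So a fourth heavy request, arriving before any of the inflated balloons has been popped, is already more than the room can hold.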

Conclusion

One of the core issues I didn’t address here is obviously how to prevent high memory usage within the django process. I hope to cover this in the next part. There are certainly some recommendations and best practices when it comes to memory usage. However, with some types of requests, it might be impossible to avoid high memory usage. Given enough simultaneous requests, even with optimization that leads to ‘reasonable’ memory utilization, django might still run out of memory. The minspare, maxchildren, maxspare, and most importantly maxrequests parameters are therefore crucial to having a more stable django service. It’s not a bullet-proof solution, but from my experience it helps a lot.

Sweet-Spot settings

So what are my recommended settings? I found that setting maxrequests=100 seems to give reasonably good performance overall. Simply run django in prefork mode with something like this:

manage.py runfcgi method=prefork host=$DAEMON_HOST port=$BACKUP_DAEMON_PORT pidfile=$PIDFILE maxrequests=100

I didn’t see any need to change the default minspare, maxchildren, or maxspare parameters, however.
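The `runfcgi` command belongs to older django releases (it was removed in Django 1.9). As a rough sketch of the same ‘auto-popper’ idea on a different server, assuming a gunicorn deployment (an assumption, not the setup this post was written for), a `gunicorn.conf.py` file could look like this. The file is plain Python; the bind address and the worker count are placeholder values.

```python
# gunicorn.conf.py -- illustrative values only, adjust to your own server

bind = "127.0.0.1:8000"   # placeholder address
workers = 4               # number of balloons (worker processes)

# The auto-popper: restart a worker after it has served this many requests.
max_requests = 100

# Random spread added to max_requests per worker, so that the workers
# are not all restarted at the same moment.
max_requests_jitter = 10
```

The trade-off is the same as with maxrequests: a lower value pops balloons sooner but spends more effort creating new ones.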

What’s next?

In Part II I am going to cover some more advanced tweaks. Those are designed to detect and recover from situations where django runs out of memory. Using the balloon popping analogy, those tweaks/methods allow ‘popping balloons’ when memory runs out, rather than only after 100 requests. This gives another layer of protection against memory-related crashes. However, these require monitoring tools outside django. In addition, I hope to give at least some pointers on how to better utilize memory within the code.

2 replies on “django memory leaks, part I”

This is fabulous explanation of how django memory works….!

solved my website issue with 10GB memory of django process

as I described here. I just adjusted max-request to 50

I have a django+uwsgi based website. some of the tables have almost 1 million rows.

After a few website usages, the VIRT memory used by the uwsgi process reaches almost 20GB… it almost kills my server…

Could someone tell me what may have caused this problem? Are my tables too big? (Unlikely. Pinterest has much more data.) For now, I have to use reload-on-as= 10024 reload-on-rss= 4800 to kill the workers every few minutes… it is painful… any help?

Here is my uwsgi.ini file

The balloon (process) never shrinks.

Why? Could you explain this?