In my previous post, I described the architecture of Gimel – an A/B testing backend using AWS Lambda and redis HyperLogLog. One of the commenters suggested looking into Google BigQuery as a potential alternative backend.

It looked quite promising, with the potential of increasing result accuracy even further. HyperLogLog is pretty awesome, but trades accuracy for space. Google BigQuery offers very affordable analytics data storage with an SQL query interface.

There was one more thing I wanted to look into, which could also improve the redis backend – batching writes. The current gimel architecture writes every event directly to redis. Whilst redis itself is fast and offers low latency, the AWS Lambda architecture means we might have lots of simultaneous active connections to redis. As another commenter noted, this can become a bottleneck, particularly on lower-end redis hosting plans. In addition, any other backend that does not offer low-latency writes could benefit from batching. Even before trying out BigQuery, I knew I’d be looking at much higher latency and needed to queue and batch writes.

Choosing the right components

I wanted to look at ways of de-coupling the tracking events from the actual writes, and also batch them together. One lambda function will receive events from end-users and push them somewhere. Another lambda function will then read all events and write them in bulk into Google BigQuery. What should this somewhere be? I looked at a few alternatives:

- Amazon SQS – Amazon Simple Queue Service seemed like a natural candidate. It does exactly what’s required to queue messages, and is very competitively priced. Write latency was pretty low (once I figured out how to do it right, anyway. Thanks to Mitch Garnaat).

- Amazon SNS – with a name and purpose very confusingly similar to SQS, it offers a push-notification service that plugs easily into Lambda. It provides a publish/subscribe method for passing messages along, but without much structure around data. Latency was similar to SQS.

- Invoking another lambda – this was more of a hack to avoid end-users having to wait for the data to be written. I could simply invoke another lambda to do the more time-consuming writing task. It wasn’t an ideal solution, but I wanted to try it out. It turns out that invocation latency is pretty high for some reason, even with async invocation, and I couldn’t figure out why…

Whilst investigating those options, I “bumped into” Kinesis, which seems better suited for lambda. It offers something similar to SQS, but modelled a little differently, as streams instead of queues. Kinesis plugs easily into Lambda (where SQS won’t), and allows you to control the number of items to process in each batch. This simplified the lambda function and allowed a more predictable runtime and memory footprint. Doing this with SQS would be far more complex, and would involve implementing some kind of polling, with non-deterministic execution time or bandwidth. Kinesis also scales pretty neatly. If you need more capacity, simply add a shard. The nature of A/B testing events (or analytics data) makes it very easy to parallelize. There are no strict ordering requirements. We just get an event and need to write it, completely independently of any other event.
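To make the batch model a little more concrete, here is a minimal sketch of how a lambda function might unpack one Kinesis batch. It is not the code from the Gimel repository. It assumes each record carries a JSON-encoded event, and the names `decode_kinesis_batch` and `handler` are made up for illustration. The one thing it relies on is that Lambda delivers each record's data base64-encoded under `Records[i].kinesis.data`.

```python
import base64
import json


def decode_kinesis_batch(event):
    """Return the JSON payloads carried by one Kinesis batch delivered to Lambda."""
    payloads = []
    for record in event.get("Records", []):
        # Lambda hands over each Kinesis record's data as a base64 string.
        raw = base64.b64decode(record["kinesis"]["data"])
        # Assumption: the producer wrote each event as a JSON document.
        payloads.append(json.loads(raw))
    return payloads


def handler(event, context):
    # Hypothetical entry point. The batch size is set on the event source,
    # so the amount of work per invocation is bounded.
    events = decode_kinesis_batch(event)
    return {"received": len(events)}
```

Because the batch size is capped by the event source configuration, the loop above never has to poll or guess how much work is waiting.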

Kinesis can also be used with Redis to avoid too many active connections. You can pipeline writes inside one connection / lambda function instead of spreading them across many.
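As a rough sketch of what pipelining inside one connection could look like, the function below queues one `PFADD` per event on a single redis-py pipeline and sends them together. The key layout and the event field names (`experiment`, `variant`, `event`, `client_id`) are assumptions made for this example, not necessarily what Gimel uses. The `client` argument is expected to be a redis-py client such as `redis.StrictRedis(...)`.

```python
def write_events_pipelined(client, events):
    """Queue one PFADD per event and send them all over a single connection."""
    pipe = client.pipeline()
    for event in events:
        # Hypothetical key layout: one HyperLogLog per experiment/variant/event.
        key = "{}:{}:{}".format(event["experiment"], event["variant"], event["event"])
        pipe.pfadd(key, event["client_id"])
    # Nothing is sent until execute(), which returns one result per command.
    return pipe.execute()
```

Called from a lambda function that consumes a Kinesis batch, this means one connection and one round of writes per batch, instead of one connection per tracked event.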

Architecture

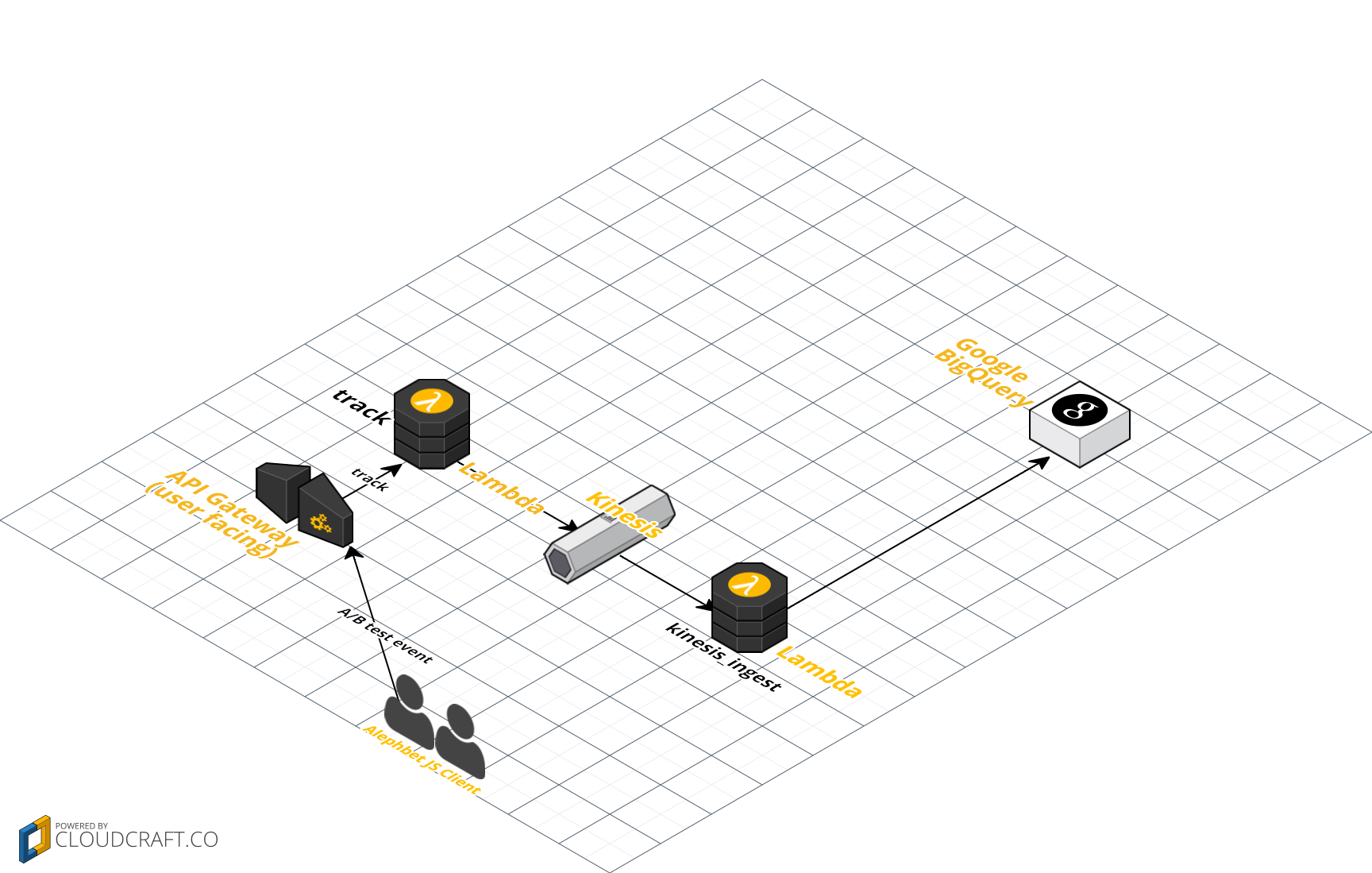

Gimel Google BigQuery Architecture with Kinesis

Refactoring Gimel to use Kinesis was very straightforward. I added a Kinesis Stream, then updated the track method to put a record into the stream. I then created another lambda function that uses the Kinesis stream as its event source. It simply picks up all events from the batch, and writes them to Google BigQuery. The whole thing fits in 58 lines of code, including comments and imports. Note, however, that it only handles the event tracking, not the reporting portion…
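The two halves of that flow can be sketched roughly as follows. This is an illustration under assumptions, not the actual Gimel code. The function names, the event fields and the choice of partition key are all hypothetical. `kinesis_client` is expected to be a boto3 Kinesis client (`boto3.client("kinesis")`), and the second function only builds the request body for BigQuery's `tabledata.insertAll` call, leaving authentication and the HTTP request out.

```python
import json


def track(kinesis_client, stream_name, experiment, variant, event, client_id):
    """Put one tracking event on the stream instead of writing it directly."""
    payload = {
        "experiment": experiment,
        "variant": variant,
        "event": event,
        "client_id": client_id,
    }
    return kinesis_client.put_record(
        StreamName=stream_name,
        Data=json.dumps(payload).encode("utf-8"),
        # Assumption: ordering does not matter, so any well-spread key works.
        PartitionKey=client_id,
    )


def to_insert_all_body(rows_with_ids):
    """Build a tabledata.insertAll body from (insert_id, row) pairs."""
    return {
        "kind": "bigquery#tableDataInsertAllRequest",
        "rows": [
            # insertId lets BigQuery de-duplicate retried rows on a best-effort basis.
            {"insertId": insert_id, "json": row}
            for insert_id, row in rows_with_ids
        ],
    }
```

One plausible choice for the insert id is the Kinesis sequence number of each record, so that a retried batch does not produce duplicate rows.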

As usual, the hardest part was figuring out which moving parts to choose, and working out the ins and outs of IAM role permissions and Google BigQuery oauth2 authentication :)

Trade-offs

There’s no such thing as a free lunch. So what are the trade-offs of this architecture?

- Price – Google BigQuery is extremely competitive, and its cost is fairly negligible unless your data is really huge. Each event takes around 100-200 bytes of data. AWS Kinesis comes with a shard-hour price of $0.015 and PUT payload of $0.014 per million records. For smaller-scale setups, this adds a minimum entry price of around $11 per month, even if you’re not pushing any data to it. This makes redis more cost-effective for the lower-end of the scale, but only by a small margin. As you scale up, I think this cost is still very competitive. To compare with the pricing calculation I did on the previous post, the redis-based architecture came out at around $100 for 15 million events. I reckon 1 shard can easily handle this load if it’s not too spiky (and probably more, with 1000 writes/second per shard). So we’re talking another $11 or so on top. 15 million events @ ~200 bytes should cost around $0.2 per month on Google BigQuery if my calculations are correct.

- Complexity and vendor lock-in – whilst the price difference is negligible, complexity increases with this solution compared to the original gimel architecture (using redis). Vendor lock-in also becomes more of a concern. The original gimel architecture uses Lambda/API gateway, but it’s fairly trivial to port it out. Not so much with this new architecture. First, we no longer rely on one vendor, but two (Amazon and Google). Second, Google BigQuery isn’t something with a clear open-source equivalent. Redis on the other hand is open-source and commoditized with competing hosting solutions. As for complexity, we replaced redis with Google BigQuery, but also added Kinesis batch processing. Kinesis isn’t inherently complex, but this de-coupling makes debugging and troubleshooting far more involved.

- Accuracy and flexibility – as I mentioned earlier, the reason for experimenting with Google BigQuery was to gain more accuracy compared to the redis HyperLogLog implementation. The benefits of this architecture are potentially much greater! HyperLogLog fits with analytics tasks that involve event counts. If you need to analyze the data differently however, then HyperLogLog might not be suitable. Building an architecture for pushing events into Google BigQuery opens up lots of opportunity for more varied analytics tasks. In that sense, Gimel with BigQuery is far more than an A/B testing backend. It offers greater flexibility in modelling and querying your analytics data.
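For the price point above, the Kinesis side of the arithmetic can be checked with a few lines. This is a back-of-the-envelope sketch that uses only the figures quoted in the post ($0.015 per shard-hour, $0.014 per million records, 15 million events of roughly 200 bytes) and assumes a 30-day month. The function names are made up for the example, and BigQuery pricing is deliberately left out.

```python
HOURS_PER_MONTH = 24 * 30  # assumption: a 30-day month


def kinesis_monthly_cost(shards, records, shard_hour_usd=0.015, put_per_million_usd=0.014):
    """Return (shard cost, PUT cost) in USD for one month."""
    shard_cost = shards * shard_hour_usd * HOURS_PER_MONTH
    put_cost = records / 1_000_000 * put_per_million_usd
    return shard_cost, put_cost


def payload_gigabytes(records, bytes_per_record):
    """Total payload size in GB (10^9 bytes)."""
    return records * bytes_per_record / 1e9


def average_events_per_second(records):
    """Average rate if the events were spread evenly over the month."""
    return records / (HOURS_PER_MONTH * 3600)


if __name__ == "__main__":
    shard_cost, put_cost = kinesis_monthly_cost(shards=1, records=15_000_000)
    print(round(shard_cost, 2), round(put_cost, 2))
    print(payload_gigabytes(15_000_000, 200))
    print(round(average_events_per_second(15_000_000), 1))
```

With these inputs, one shard comes to about $10.80 per month and the PUTs to about $0.21, for roughly 3 GB of event data. The average rate is just under 6 events per second, far below the 1000 writes/second a shard accepts, so the real question for a single shard is how spiky the traffic gets.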

Code?

This is still fairly experimental, but you can see the code on the bigquery branch of Gimel on GitHub. I’m still not entirely sure whether to merge the two together or separate them into their own independent projects.

One reply on “a scalable Analytics backend with Google BigQuery, AWS Lambda and Kinesis”

I’d definitely recommend keeping these projects separate, as the requirements for this version are much more complex.